Avoiding the Hidden Hazards: Navigating Non-Obvious Pitfalls in ML on iOS

Do you need ML?

Machine learning is excellent at spotting patterns. If you manage to collect a clean dataset for your task, it’s usually only a matter of time before you’re able to build an ML model with superhuman performance. This is especially true in classic tasks like classification, regression, and anomaly detection.

When you are ready to solve some of your business problems with ML, you must consider where your ML models will run. For some, it makes sense to run a server infrastructure. This has the benefit of keeping your ML models private, so it’s harder for competitors to catch up. On top of that, servers can run a wider variety of models. For example, GPT models (made famous with ChatGPT) currently require modern GPUs, so consumer devices are out of the question. On the other hand, maintaining your infrastructure is quite costly, and if a consumer device can run your model, why pay more? Additionally, there may also be privacy concerns where you cannot send user data to a remote server for processing.

However, let’s assume it makes sense to use your customers’ iOS devices to run an ML model. What could go wrong?

Platform limitations

Memory limits

iOS devices have far less available video memory than their desktop counterparts. For example, the recent Nvidia RTX 4080 Ti has 20 GB of available memory. iPhones, on the other hand, have video memory shared with the rest of the RAM in what they call “unified memory.” For reference, the iPhone 14 Pro has 6 GB of RAM. Moreover, if you allocate more than half the memory, iOS is very likely to kill the app to make sure the operating system stays responsive. This means you can only count on having 2-3 GB of available memory for neural network inference.

Researchers typically train their models to optimize accuracy over memory usage. However, there is also research available on ways to optimize for speed and memory footprint, so you can either look for less demanding models or train one yourself.

Network layers (operations) support

Most ML and neural networks come from well-known deep learning frameworks and are then converted to CoreML models with Core ML Tools. CoreML is an inference engine written by Apple that can run various models on Apple devices. The layers are well-optimized for the hardware and the list of supported layers is quite long, so this is an excellent starting point. However, other options like Tensorflow Lite are also available.

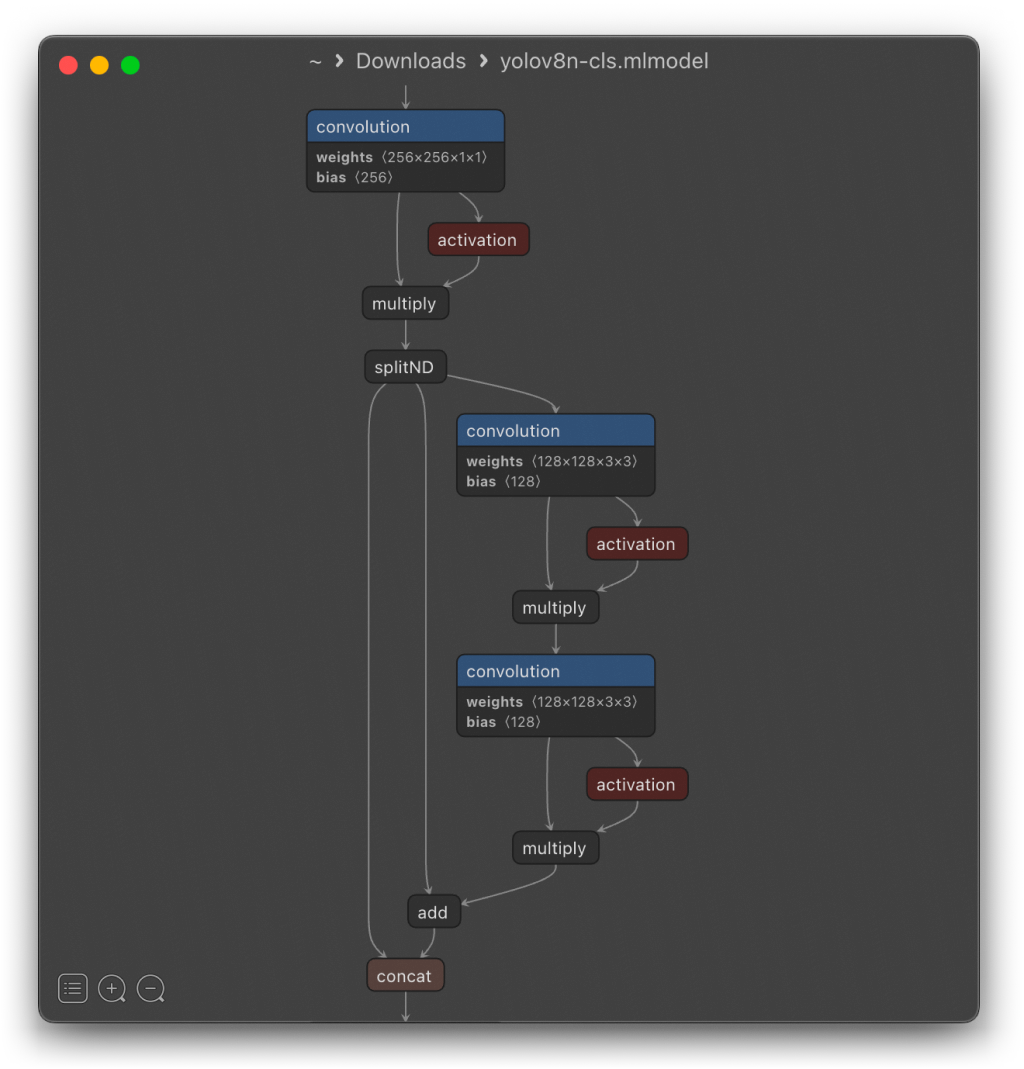

The best way to see what’s possible with CoreML is to look at some already converted models using viewers like Netron. Apple lists some of the officially supported models, but there are community-driven model zoos as well. The full list of supported operations is constantly changing, so looking at Core ML Tools source code can be helpful as a starting point. For example, if you wish to convert a PyTorch model you can try to find the necessary layer here.

Additionally, certain new architectures may contain hand-written CUDA code for some of the layers. In such situations, you cannot expect CoreML to provide a pre-defined layer. Nevertheless, you can provide your own implementation if you have a skilled engineer familiar with writing GPU code.

Overall, the best advice here is to try converting your model to CoreML early, even before training it. If you have a model that wasn’t converted right away, it’s possible to modify the neural network definition in your DL framework or Core ML Tools converter source code to generate a valid CoreML model without the need to write a custom layer for CoreML inference.

Validation

Inference engine bugs

There is no way to test every possible combination of layers, so the inference engine will always have some bugs. For example, it’s common to see dilated convolutions use way too much memory with CoreML, likely indicating a badly written implementation with a large kernel padded with zeros. Another common bug is incorrect model output for some model architectures.

In this case, the order of operations may factor in. It’s possible to get incorrect results depending on whether activation with convolution or the residual connection comes first. The only real way to guarantee that everything is working properly is to take your model, run it on the intended device and compare the result with a desktop version. For this test, it’s helpful to have at least a semi-trained model available, otherwise, the numeric error can accumulate for badly randomly initialized models. Even though the final trained model will work fine, the results can be quite different between the device and the desktop for a randomly initialized model.

Precision loss

iPhone uses half-precision accuracy extensively for inference. While some models do not have any noticeable accuracy degradation due to fewer bits in floating point representation, other models may suffer. You can approximate the precision loss by evaluating your model on the desktop with half-precision and computing a test metric for your model. An even better method is to run it on an actual device to find out if the model is as accurate as intended.

Profiling

Different iPhone models have varied hardware capabilities. The latest ones have improved Neural Engine processing units that can elevate the overall performance significantly. They are optimized for certain operations, and CoreML is able to intelligently distribute work between CPU, GPU, and Neural Engine. Apple GPUs have also improved over time, so it’s normal to see fluctuating performances across different iPhone models. It’s a good idea to test your models on minimally supported devices to ensure maximum compatibility and acceptable performance for older devices.

It’s also worth mentioning that CoreML can optimize away some of the intermediate layers and computations in-place, which can drastically improve performance. Another factor to consider is that sometimes, a model that performs worse on a desktop may actually do inference faster on iOS. This means it’s worthwhile to spend some time experimenting with different architectures.

For even more optimization, Xcode has a nice Instruments tool with a template just for CoreML models that can give a more thorough insight into what’s slowing down your model inference.

Conclusion

Nobody can foresee all of the possible pitfalls when developing ML models for iOS. However, there are some mistakes that can be avoided if you know what to look for. Start converting, validating, and profiling your ML models early to make sure that your model will work correctly and fit your business requirements, and follow the tips outlined above to ensure success as quickly as possible.

Originally published on Unite.AI